A few different people have asked me for my thoughts on Lavender, so here are some quick ones, written in haste, and posted before I lose my nerve.

Earlier today, the Tel Aviv-based outlet +972 Magazine published a long exposé on “Lavender,” an artificial intelligence-based program that Israel uses to automatically identify and target suspected Hamas and Palestinian Islamic Jihad fighters. The entire article is worth a read, but it essentially argues that this system generates “kill lists” for military commanders. These kill lists are given only perfunctory screening before a strike is approved. No underlying intelligence gets examined: the officer simply confirms that the target is male and then signs off on the strike. The target is usually killed at home, with a “dumb” bomb, surrounded by family. And as has been the case during the majority of the war, only limited consideration is given to civilian life.

After the publication of the story, Lavender was quickly metabolized into the never-ending AI discourse machine. Commentators pointed to the system’s high error rate (at least 10%). Questions were raised about the likelihood of bias in the algorithm used to make targeting decisions, as well as the reliability of its underlying dataset. As is often the case with AI discourse, the conversation quickly narrowed to technical questions of bias, accuracy, and machine ethics.

This isn’t surprising. Since the beginning of the “techlash” a decade ago, technology critics have tended to take a view of technological politics that is both internalist and partial. By this, I mean that the “politics” of a technological artifact are understood to be imbued in the object at its creation—the values are “baked in” to a technology—while the actual conditions of use are often ignored or only gestured at.

As I’ve mentioned in the past, there are historical reasons for the popularity of this perspective, namely that the most recent generation of AI/ML fairness researchers grew up inside of Big Tech. They spent years thinking about how to make these systems fair and unbiased—something the firms assumed would make emerging technologies trustworthy and marketable—but relatively little time thinking about contexts and conditions of use.

Lavender offers a good case study of the limitations of such an approach. We do not, and likely cannot, understand the technical underpinnings of this system. It might be a fair assumption that it uses a method which descends from mid-2000s network theories about terrorist organizations, but, right now at least, there’s no way to be certain. We don’t have access to the algorithms used to make targeting decisions, and they’re unlikely to be declassified any time soon.

But just because we can’t peek inside the “black box” doesn’t mean we can’t know anything about the technology. As is often the case, the internals are not determinative or even interesting. The technology’s true destructive potential only emerges through use.

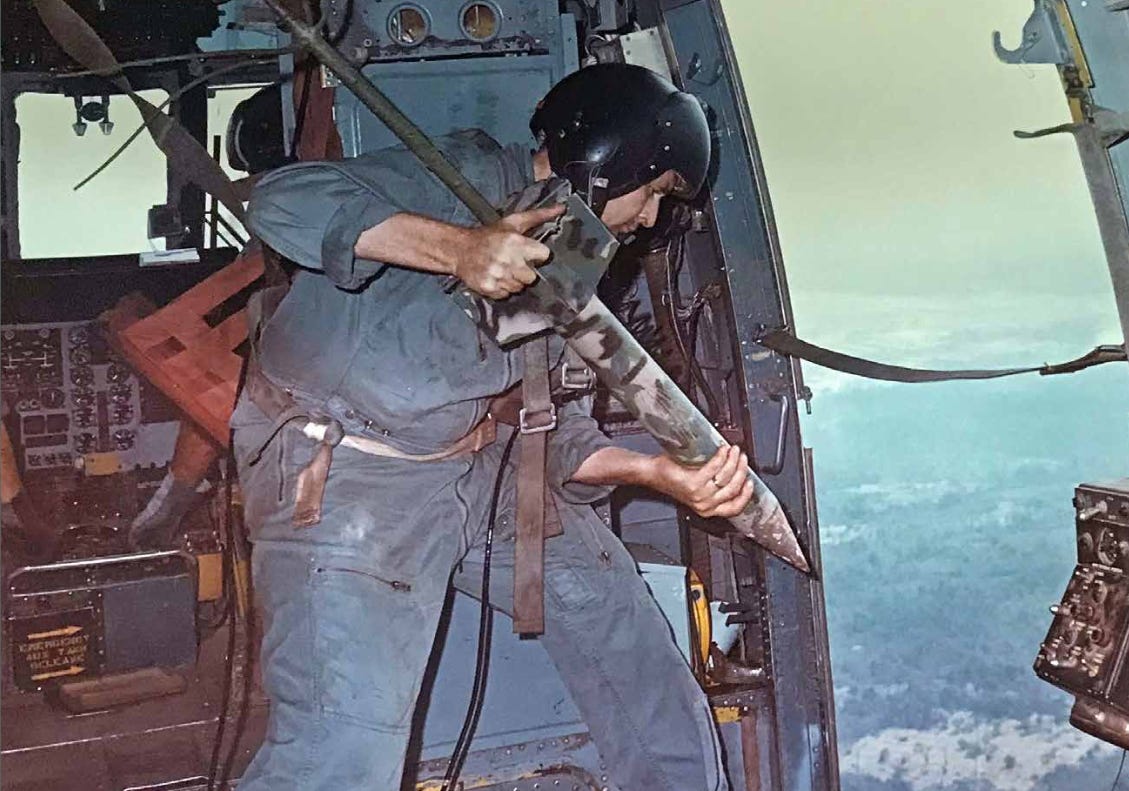

During the Vietnam War, the US Department of Defense launched an operation called “Igloo White.” The program, which might be familiar to you if you’ve read The Closed World or seen the documentary The Fog of War, was intended to disrupt the movement of North Vietnamese supplies along the Ho Chi Minh Trail. It did this by scattering the route with a variety of remote sensing devices: acoustic sensors that could pick up engine noise, chemical sensors that could pick up the scent of sweat and urine, and so on. This data was fed back to the Nakhon Phanom air base in Thailand, where it was processed by IBM 360/65 computers. From there, bombing runs were planned.

These bombing runs were not precision strikes. “We are not bombing a precise point on the ground with a point-target bomb,” the officer in charge of the program explained in a 1971 Pentagon press briefing. “We can’t determine each truck’s location that accurately with ground sensors, which are listening—not viewing—devices. Since we never actually ‘see’ the trucks as point targets, we use area-type ordnance to cover the zone we know the trucks to be in. … [Thus] we have an interdiction system which can hurt the enemy, even when he seeks the cover of foliage, weather, or darkness.”

The problem was that Igloo White didn’t seem to actually hurt the enemy or, at least, it couldn’t be proven to. The CIA thought it was basically useless. North Vietnamese soldiers knew about the sensor network, and quickly came up with a number of ways to make it generate false positives: hanging buckets of animal urine near the “people sniffing” sensors, or stampeding animals along the trail to fool the seismic sensors. But it did “work” in another way. Like Lavender, it streamlined and automated formerly complicated, contentious targeting decisions. It cut down deliberation time and made decisions feel objective and mechanical, products of cold machine logic. Through the magic of objectivity theater, human subjectivity and human responsibility could be diminished, even though humans were still making these decisions and were still “in the loop.”

The accuracy, efficiency, and fairness of these systems are not the point, and thus cracking open the black box will tell you very little about how they work. The true process is more straightforwardly social: they operate through a process I think of as “technological delegation.” They work by mystifying questions of responsibility and agency behind a veil of technology, by subtly changing the subject. Uber did this by selling an unlicensed, unregulated taxi service as an “app.” Google did this when they used to blame the algorithm for search results. These systems are machines for hiding behind, instruments of moral and legal arbitrage. As such, the normal forms of AI critique not only misapprehend the problem, but by focusing attention too tightly on technological politics, they actively help to deepen the illusion.


Programs exactly like this also have a predecessor in the NSA's SKYNET, the inner workings of which were arguably even worse. Civilians killed in strikes on suspects were fed into the machine as suspected terrorists; if they bomb a target while he's in a taxi, the deceased taxi driver gets added to the data, and now his family and coworkers are considered new targets.